When an Experiment Produces the Same Results Again and Again

Reproducibility, also known as replicability and repeatability, is a major principle underpinning the scientific method. For the findings of a study to be reproducible means that results obtained by an experiment, an observational study, or a statistical analysis of a data set should be achieved again with a high degree of reliability when the study is replicated. There are different kinds of replication,[1] but typically replication studies involve different researchers using the same methodology. Only after one or several such successful replications should a result be recognized as scientific knowledge.

With a narrower scope, reproducibility has been introduced in computational sciences: any results should be documented by making all data and code available in such a way that the computations can be executed again with identical results.
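
As a minimal sketch of what "executed again with identical results" can mean in practice, the Python below runs a small, hypothetical bootstrap analysis with a fixed random seed and compares SHA-256 fingerprints of two runs. The function names `run_analysis` and `fingerprint`, the data, and the seed are illustrative assumptions, not part of any standard tool.

```python
import hashlib
import json
import random


def run_analysis(seed: int) -> dict:
    """Hypothetical analysis: bootstrap interval for the mean of fixed data."""
    data = [4.1, 3.9, 4.4, 4.0, 4.2, 3.8, 4.3]
    rng = random.Random(seed)  # private generator seeded explicitly, no hidden global state
    boot_means = []
    for _ in range(1000):
        sample = [rng.choice(data) for _ in data]
        boot_means.append(sum(sample) / len(sample))
    boot_means.sort()
    return {
        "seed": seed,
        "mean": sum(data) / len(data),
        # approximate 95% interval: the 25th and 975th of 1,000 sorted values
        "ci_low": boot_means[24],
        "ci_high": boot_means[974],
    }


def fingerprint(result: dict) -> str:
    """SHA-256 of a canonical (key-sorted) JSON serialization of the result."""
    canonical = json.dumps(result, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


if __name__ == "__main__":
    first = fingerprint(run_analysis(seed=2024))
    second = fingerprint(run_analysis(seed=2024))
    print("identical results:", first == second)
```

Identical fingerprints are only expected for the same code and environment: Python's documentation does not guarantee that `random.choice` produces the same sequence across Python versions, which is one reason reproducible projects also record the software versions they used.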

In recent decades, there has been a rising concern that many published scientific results fail the test of reproducibility, evoking a reproducibility or replication crisis.

History

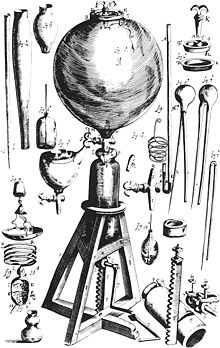

Boyle's air pump was, in terms of the 17th century, a complicated and expensive scientific apparatus, making reproducibility of results difficult

The first to stress the importance of reproducibility in science was the Irish chemist Robert Boyle, in England in the 17th century. Boyle's air pump was designed to generate and study vacuum, which at the time was a very controversial concept. Indeed, distinguished philosophers such as René Descartes and Thomas Hobbes denied the very possibility of vacuum existence. Historians of science Steven Shapin and Simon Schaffer, in their 1985 book Leviathan and the Air-Pump, describe the debate between Boyle and Hobbes, ostensibly over the nature of vacuum, as fundamentally an argument about how useful knowledge should be gained. Boyle, a pioneer of the experimental method, maintained that the foundations of knowledge should be constituted by experimentally produced facts, which can be made believable to a scientific community by their reproducibility. By repeating the same experiment over and over again, Boyle argued, the certainty of fact will emerge.

The air pump, which in the 17th century was a complicated and expensive apparatus to build, also led to one of the first documented disputes over the reproducibility of a particular scientific phenomenon. In the 1660s, the Dutch scientist Christiaan Huygens built his own air pump in Amsterdam, the first one outside the direct management of Boyle and his assistant at the time, Robert Hooke. Huygens reported an effect he termed "anomalous suspension", in which water appeared to levitate in a glass jar inside his air pump (in fact suspended over an air bubble), but Boyle and Hooke could not replicate this phenomenon in their own pumps. As Shapin and Schaffer describe, "it became clear that unless the phenomenon could be produced in England with one of the two pumps available, then no one in England would accept the claims Huygens had made, or his competence in working the pump". Huygens was finally invited to England in 1663, and under his personal guidance Hooke was able to replicate the anomalous suspension of water. Following this, Huygens was elected a Foreign Member of the Royal Society. However, Shapin and Schaffer also note that "the accomplishment of replication was dependent on contingent acts of judgment. One cannot write down a formula saying when replication was or was not achieved".[2]

The philosopher of science Karl Popper noted briefly in his famous 1934 book The Logic of Scientific Discovery that "non-reproducible single occurrences are of no significance to science".[3] The statistician Ronald Fisher wrote in his 1935 book The Design of Experiments, which set the foundations for the modern scientific practice of hypothesis testing and statistical significance, that "we may say that a phenomenon is experimentally demonstrable when we know how to conduct an experiment which will rarely fail to give us statistically significant results".[4] Such assertions express a common dogma in modern science that reproducibility is a necessary (although not necessarily sufficient) condition for establishing a scientific fact, and in practice for establishing scientific authority in any field of knowledge. However, as noted above by Shapin and Schaffer, this dogma is not formulated quantitatively in the way that statistical significance is, and therefore it is not explicitly established how many times a fact must be replicated to be considered reproducible.

Terminology

Replicability and repeatability are related terms broadly or loosely synonymous with reproducibility (for example, among the general public), but they are frequently usefully differentiated in more precise senses, as follows.

Two major steps are naturally distinguished in connection with the reproducibility of experimental or observational studies. When new data are obtained in the attempt to achieve it, the term replicability is often used, and the new study is a replication or replicate of the original one. For obtaining the same results when the data set of the original study is analyzed again with the same procedures, many authors use the term reproducibility in a narrow, technical sense coming from its use in computational research. Repeatability refers to the repetition of the experiment within the same study by the same researchers. Reproducibility in the original, broad sense is only acknowledged if a replication performed by an independent team of researchers is successful.

Unfortunately, the terms reproducibility and replicability sometimes appear, even in the scientific literature, with reversed meanings,[5][6] when researchers fail to enforce the more precise usage.

Measures of reproducibility and repeatability

In chemistry, the terms reproducibility and repeatability are used with a specific quantitative meaning.[7] In inter-laboratory experiments, a concentration or other quantity of a chemical substance is measured repeatedly in different laboratories to assess the variability of the measurements. The standard deviation of the difference between two values obtained within the same laboratory is then called repeatability, and the standard deviation of the difference between two measurements from different laboratories is called reproducibility.[8] These measures are related to the more general concept of variance components in metrology.
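
The variance-components idea can be made concrete with a one-way analysis of variance. The sketch below assumes a balanced design (every laboratory reports the same number of replicates) and follows the general approach of ISO 5725-2: the within-laboratory mean square estimates the repeatability variance s_r², and adding the between-laboratory component s_L² gives the reproducibility variance s_R². Standards usually report s_r and s_R themselves; the standard deviation of the difference between two independent results is √2 times larger, so the function returns both. The function name and the example data are hypothetical.

```python
import math


def precision_from_interlab(results: list[list[float]]) -> dict:
    """Estimate repeatability and reproducibility standard deviations.

    `results` holds one list of replicate measurements per laboratory.
    Assumes a balanced design: the same number n >= 2 of replicates per lab.
    """
    k = len(results)
    n = len(results[0]) if results else 0
    if k < 2 or n < 2 or any(len(lab) != n for lab in results):
        raise ValueError("need >= 2 labs, each with the same n >= 2 replicates")

    lab_means = [sum(lab) / n for lab in results]
    grand_mean = sum(lab_means) / k

    # Within-lab mean square: scatter of replicates around their own lab mean.
    ss_within = sum((x - m) ** 2 for lab, m in zip(results, lab_means) for x in lab)
    ms_within = ss_within / (k * (n - 1))

    # Between-lab mean square: scatter of lab means around the grand mean.
    ms_between = n * sum((m - grand_mean) ** 2 for m in lab_means) / (k - 1)

    s_r2 = ms_within                               # repeatability variance
    s_l2 = max(0.0, (ms_between - ms_within) / n)  # between-lab variance component
    s_R2 = s_r2 + s_l2                             # reproducibility variance

    return {
        "s_r": math.sqrt(s_r2),
        "s_R": math.sqrt(s_R2),
        # SD of the difference between two independent results is sqrt(2) * s
        "sd_diff_within": math.sqrt(2 * s_r2),
        "sd_diff_between": math.sqrt(2 * s_R2),
    }


if __name__ == "__main__":
    labs = [[10.0, 10.2], [10.4, 10.6], [9.8, 10.0]]  # hypothetical data
    for name, value in precision_from_interlab(labs).items():
        print(f"{name}: {value:.3f}")
```

For these three hypothetical laboratories, s_r = √0.02 ≈ 0.141 while s_R = √(31/300) ≈ 0.321, so most of the scatter comes from differences between laboratories rather than within them.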

Reproducible research

Reproducible research method

The term reproducible research refers to the idea that scientific results should be documented in such a way that their deduction is fully transparent. This requires a detailed description of the methods used to obtain the data[9][10] and making the full dataset and the code to calculate the results easily accessible.[11][12][13][14][15][16] This is an essential part of open science.

To make any research project computationally reproducible, general practice involves all data and files being clearly separated, labelled, and documented. All operations should be fully documented and automated as much as practicable, avoiding manual intervention where feasible. The workflow should be designed as a sequence of smaller steps that are combined so that the intermediate outputs from one step feed directly as inputs into the next step. Version control should be used, as it lets the history of the project be easily reviewed and allows changes to be documented and tracked in a transparent manner.
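
The chaining and documentation practices above can be sketched in a few lines of Python. The hypothetical `run_pipeline` helper passes each step's output directly to the next step and records a fingerprint of every intermediate input and output, so a re-run can be compared step by step. The step functions and data are invented for illustration; in a real project the script and its log would be kept under version control (for example Git) rather than managed by the script itself.

```python
import hashlib
import json
from typing import Callable


def digest(obj) -> str:
    """Short SHA-256 fingerprint of any JSON-serializable object."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()[:12]


def run_pipeline(raw, steps: list[tuple[str, Callable]]):
    """Feed each step's output into the next step and log every hand-off."""
    log = []
    data = raw
    for name, func in steps:
        out = func(data)
        log.append({"step": name, "input": digest(data), "output": digest(out)})
        data = out  # the intermediate output becomes the next step's input
    return data, log


# Hypothetical steps, for illustration only.
def drop_missing(rows):
    return [r for r in rows if r is not None]


def to_celsius(rows):
    return [round((f - 32) * 5 / 9, 2) for f in rows]


def summarize(rows):
    return {"n": len(rows), "mean": round(sum(rows) / len(rows), 2)}


if __name__ == "__main__":
    raw = [68.0, None, 71.6, 75.2]  # hypothetical Fahrenheit readings
    steps = [("clean", drop_missing), ("convert", to_celsius), ("summarize", summarize)]
    result, log = run_pipeline(raw, steps)
    print(result)
    print(json.dumps(log, indent=2))
```

Because each log entry's output fingerprint equals the next entry's input fingerprint, a mismatch on a later re-run points to the first step whose behaviour changed.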

A basic workflow for reproducible research involves data acquisition, data processing and data analysis. Data acquisition primarily consists of obtaining primary data from a primary source, such as surveys, field observations or experimental research, or obtaining data from an existing source. Data processing involves the processing and review of the raw data collected in the first stage, and includes data entry, data manipulation and filtering, and may be done using software. The data should be digitized and prepared for data analysis. Data may be analysed with the use of software to interpret or visualise statistics or data to produce the desired results of the research, such as quantitative results including figures and tables. The use of software and automation enhances the reproducibility of research methods.[17]
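
The three stages can be kept as three separate functions, as in this minimal sketch. The embedded CSV text stands in for an acquired raw file, and the column names, filtering rule and summary are assumptions chosen for illustration. The point of the structure is that the raw data are never edited by hand: every exclusion is made by code that can be rerun.

```python
import csv
import io
import statistics

# Stand-in for an acquired raw file (hypothetical survey export).
RAW_CSV = """respondent,group,score
1,control,12
2,treatment,15
3,control,
4,treatment,17
5,control,11
6,treatment,abc
7,treatment,16
"""


def acquire(text: str) -> list[dict]:
    """Data acquisition: read the raw records exactly as delivered."""
    return list(csv.DictReader(io.StringIO(text)))


def process(records: list[dict]) -> list[dict]:
    """Data processing: keep only records with a numeric score."""
    clean = []
    for rec in records:
        try:
            score = float(rec["score"])
        except ValueError:
            continue  # missing or non-numeric score; a real project would log why
        clean.append({"group": rec["group"], "score": score})
    return clean


def analyze(rows: list[dict]) -> dict:
    """Data analysis: per-group count and mean, i.e. a small results table."""
    table = {}
    for group in sorted({r["group"] for r in rows}):
        scores = [r["score"] for r in rows if r["group"] == group]
        table[group] = {"n": len(scores), "mean": statistics.mean(scores)}
    return table


if __name__ == "__main__":
    for group, stats in analyze(process(acquire(RAW_CSV))).items():
        print(f"{group:10s} n={stats['n']} mean={stats['mean']:.2f}")
```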

There are systems that facilitate such documentation, like the R Markdown language[18] or the Jupyter notebook.[19] The Open Science Framework provides a platform and useful tools to support reproducible research.

Reproducible research in practice

Psychology has seen a renewal of internal concerns about irreproducible results (see the entry on the replication crisis for empirical results on the success rates of replications). Researchers showed in a 2006 study that, of 141 authors of empirical articles published by the American Psychological Association (APA), 103 (73%) did not respond with their data over a six-month period.[20] A follow-up study published in 2015 found that 246 out of 394 contacted authors of papers in APA journals (62%) did not share their data upon request.[21] In a 2012 paper, it was suggested that researchers should publish data along with their works, and a dataset was released alongside as a demonstration.[22] In 2017, an article published in Scientific Data suggested that this may not be sufficient and that the whole analysis context should be disclosed.[23]

In economics, concerns have been raised about the credibility and reliability of published research. In other sciences, reproducibility is regarded as fundamental and is often a prerequisite for research to be published; in economics, however, it is not seen as a top priority. Most peer-reviewed economics journals do not take any substantive measures to ensure that published results are reproducible, although the top economics journals have been moving to adopt mandatory data and code archives.[24] There are low or no incentives for researchers to share their data, and authors would have to bear the costs of compiling data into reusable forms. Economic research is often not reproducible, as only a portion of journals have adequate disclosure policies for datasets and program code, and even where such policies exist, authors frequently do not comply with them or the publisher does not enforce them. A study of 599 articles published in 37 peer-reviewed journals revealed that while some journals have achieved high compliance rates, a significant portion have complied only partially or not at all. On an article level, the average compliance rate was 47.5%; on a journal level, the average compliance rate was 38%, ranging from 13% to 99%.[25]
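
The article-level and journal-level figures differ because they weight the data differently. Assuming the journal-level figure is an unweighted mean of per-journal rates, a few large, highly compliant journals raise the article-level rate but count only once each at the journal level. The journal names and counts below are invented and do not reconstruct the cited study.

```python
def compliance_rates(journals: dict[str, tuple[int, int]]) -> tuple[float, float]:
    """Return (article-level rate, journal-level rate) as fractions.

    `journals` maps a journal name to (articles checked, articles compliant).
    """
    checked = sum(total for total, _ in journals.values())
    compliant = sum(ok for _, ok in journals.values())
    article_level = compliant / checked  # every article weighs the same
    per_journal = [ok / total for total, ok in journals.values()]
    journal_level = sum(per_journal) / len(per_journal)  # every journal weighs the same
    return article_level, journal_level


if __name__ == "__main__":
    hypothetical = {"J1": (40, 36), "J2": (5, 1), "J3": (5, 1)}
    art, jour = compliance_rates(hypothetical)
    print(f"article level {art:.1%}, journal level {jour:.1%}")
```

With these made-up counts the article-level rate is 76% (38 of 50 articles) while the journal-level rate is about 43% (the mean of 90%, 20% and 20%).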

A 2018 study published in the journal PLOS ONE found that 14.4% of a sample of public health researchers had shared their data, their code, or both.[26]

There have been initiatives to improve reporting, and hence reproducibility, in the medical literature for many years, beginning with the CONSORT initiative, which is now part of a wider initiative, the EQUATOR Network. This group has recently turned its attention to how better reporting might reduce waste in research,[27] especially biomedical research.

Reproducible research is key to new discoveries in pharmacology. A Phase I discovery will be followed by Phase II reproductions as a drug develops towards commercial production. In recent decades, Phase II success has fallen from 28% to 18%. A 2011 study found that 65% of medical studies were inconsistent when re-tested, and only 6% were completely reproducible.[28]

Noteworthy irreproducible results

Hideyo Noguchi became famous for correctly identifying the bacterial agent of syphilis, but also claimed that he could culture this agent in his laboratory. Nobody else has been able to reproduce this latter result.[29]

In March 1989, University of Utah chemists Stanley Pons and Martin Fleischmann reported the production of excess heat that could only be explained by a nuclear process ("cold fusion"). The report was astounding given the simplicity of the equipment: it was essentially an electrolysis cell containing heavy water and a palladium cathode, which rapidly absorbed the deuterium produced during electrolysis. The news media reported on the experiments widely, and it was a front-page item in many newspapers around the world (see science by press conference). Over the next several months others tried to replicate the experiment, but were unsuccessful.[30]

Nikola Tesla claimed as early as 1899 to have used a high-frequency current to light gas-filled lamps from over 25 miles (40 km) away without using wires. In 1904 he built Wardenclyffe Tower on Long Island to demonstrate means to send and receive power without connecting wires. The facility was never fully operational and was not completed due to economic problems, so no attempt to reproduce his first result was ever carried out.[31]

Other examples in which contrary evidence has refuted the original claim:

- Stimulus-triggered acquisition of pluripotency, revealed to be the result of fraud

- GFAJ-1, a bacterium that could purportedly incorporate arsenic into its DNA in place of phosphorus

- MMR vaccine controversy – a study in The Lancet claiming the MMR vaccine caused autism was revealed to be fraudulent

- Schön scandal – semiconductor "breakthroughs" revealed to be fraudulent

- Power posing – a social psychology phenomenon that went viral after being the subject of a very popular TED talk, but could not be replicated in dozens of studies[32]

See also

- Metascience

- Accuracy

- ANOVA gauge R&R

- Contingency

- Corroboration

- Reproducible builds

- Falsifiability

- Hypothesis

- Measurement uncertainty

- Pathological science

- Pseudoscience

- Replication (statistics)

- Replication crisis

- ReScience C (journal)

- Retraction (notable retractions)

- Tautology

- Testability

- Verification and validation

References

- ^ Tsang, Eric W. K.; Kwan, Kai-man (1999). "Replication and Theory Development in Organizational Science: A Critical Realist Perspective". Academy of Management Review. 24 (4): 759–780. doi:10.5465/amr.1999.2553252. ISSN 0363-7425.

- ^ Steven Shapin and Simon Schaffer, Leviathan and the Air-Pump, Princeton University Press, Princeton, New Jersey (1985).

- ^ This citation is from the 1959 translation into English: Karl Popper, The Logic of Scientific Discovery, Routledge, London, 1992, p. 66.

- ^ Ronald Fisher, The Design of Experiments (1971) [1935] (9th ed.), Macmillan, p. 14.

- ^ Barba, Lorena A. (2018). "Terminologies for Reproducible Research" (PDF). arXiv:1802.03311. Retrieved 2020-10-15.

- ^ Liberman, Mark. "Replicability vs. reproducibility — or is it the other way round?". Retrieved 2020-10-15.

- ^ "IUPAC - reproducibility (R05305)". International Union of Pure and Applied Chemistry. Retrieved 2022-03-04.

- ^ Subcommittee E11.20 on Test Method Evaluation and Quality Control (2014). "Standard Practice for Use of the Terms Precision and Bias in ASTM Test Methods". ASTM International. ASTM E177. (subscription required)

- ^ King, Gary (1995). "Replication, Replication". PS: Political Science and Politics. 28 (3): 444–452. doi:10.2307/420301. ISSN 1049-0965. JSTOR 420301.

- ^ Kühne, Martin; Liehr, Andreas W. (2009). "Improving the Traditional Information Management in Natural Sciences". Data Science Journal. 8 (1): 18–27. doi:10.2481/dsj.8.18.

- ^ Fomel, Sergey; Claerbout, Jon (2009). "Guest Editors' Introduction: Reproducible Research". Computing in Science and Engineering. 11 (1): 5–7. Bibcode:2009CSE....11a...5F. doi:10.1109/MCSE.2009.14.

- ^ Buckheit, Jonathan B.; Donoho, David L. (May 1995). WaveLab and Reproducible Research (PDF) (Report). California, United States: Stanford University, Department of Statistics. Technical Report No. 474. Retrieved 5 January 2015.

- ^ "The Yale Law School Round Table on Data and Code Sharing: "Reproducible Research"". Computing in Science and Engineering. 12 (5): 8–12. 2010. doi:10.1109/MCSE.2010.113.

- ^ Marwick, Ben (2016). "Computational reproducibility in archaeological research: Basic principles and a case study of their implementation". Journal of Archaeological Method and Theory. 24 (2): 424–450. doi:10.1007/s10816-015-9272-9. S2CID 43958561.

- ^ Goodman, Steven N.; Fanelli, Daniele; Ioannidis, John P. A. (1 June 2016). "What does research reproducibility mean?". Science Translational Medicine. 8 (341): 341ps12. doi:10.1126/scitranslmed.aaf5027. PMID 27252173.

- ^ Harris J.M; Johnson K.J; Combs T.B; Carothers B.J; Luke D.A; Wang X (2019). "Three Changes Public Health Scientists Can Make to Help Build a Culture of Reproducible Research". Public Health Reports. 134 (2): 109–111. doi:10.1177/0033354918821076. ISSN 0033-3549. OCLC 7991854250. PMC 6410469. PMID 30657732.

- ^ Kitzes, Justin; Turek, Daniel; Deniz, Fatma (2018). The Practice of Reproducible Research: Case Studies and Lessons from the Data-Intensive Sciences. Oakland, California: University of California Press. pp. 19–30. ISBN 9780520294745. JSTOR 10.1525/j.ctv1wxsc7.

- ^ Marwick, Ben; Boettiger, Carl; Mullen, Lincoln (29 September 2017). "Packaging data analytical work reproducibly using R (and friends)". The American Statistician. 72: 80–88. doi:10.1080/00031305.2017.1375986. S2CID 125412832.

- ^ Kluyver, Thomas; Ragan-Kelley, Benjamin; Perez, Fernando; Granger, Brian; Bussonnier, Matthias; Frederic, Jonathan; Kelley, Kyle; Hamrick, Jessica; Grout, Jason; Corlay, Sylvain (2016). "Jupyter Notebooks–a publishing format for reproducible computational workflows" (PDF). In Loizides, F; Schmidt, B (eds.). Positioning and Power in Academic Publishing: Players, Agents and Agendas. 20th International Conference on Electronic Publishing. IOS Press. pp. 87–90. doi:10.3233/978-1-61499-649-1-87.

- ^ Wicherts, J. M.; Borsboom, D.; Kats, J.; Molenaar, D. (2006). "The poor availability of psychological research data for reanalysis". American Psychologist. 61 (7): 726–728. doi:10.1037/0003-066X.61.7.726. PMID 17032082.

- ^ Vanpaemel, W.; Vermorgen, M.; Deriemaecker, L.; Storms, G. (2015). "Are we wasting a good crisis? The availability of psychological research data after the storm". Collabra. 1 (1): 1–5. doi:10.1525/collabra.13.

- ^ Wicherts, J. M.; Bakker, M. (2012). "Publish (your data) or (let the data) perish! Why not publish your data too?". Intelligence. 40 (2): 73–76. doi:10.1016/j.intell.2012.01.004.

- ^ Pasquier, Thomas; Lau, Matthew K.; Trisovic, Ana; Boose, Emery R.; Couturier, Ben; Crosas, Mercè; Ellison, Aaron M.; Gibson, Valerie; Jones, Chris R.; Seltzer, Margo (5 September 2017). "If these data could talk". Scientific Data. 4: 170114. Bibcode:2017NatSD...470114P. doi:10.1038/sdata.2017.114. PMC 5584398. PMID 28872630.

- ^ McCullough, Bruce (March 2009). "Open Access Economics Journals and the Market for Reproducible Economic Research". Economic Analysis and Policy. 39 (1): 117–126. doi:10.1016/S0313-5926(09)50047-1.

- ^ Vlaeminck, Sven; Podkrajac, Felix (2017-12-10). "Journals in Economic Sciences: Paying Lip Service to Reproducible Research?". IASSIST Quarterly. 41 (1–4): 16. doi:10.29173/iq6. hdl:11108/359.

- ^ Harris, Jenine K.; Johnson, Kimberly J.; Carothers, Bobbi J.; Combs, Todd B.; Luke, Douglas A.; Wang, Xiaoyan (2018). "Use of reproducible research practices in public health: A survey of public health analysts". PLOS ONE. 13 (9): e0202447. Bibcode:2018PLoSO..1302447H. doi:10.1371/journal.pone.0202447. ISSN 1932-6203. OCLC 7891624396. PMC 6135378. PMID 30208041.

- ^ "Research Waste/EQUATOR Conference | Research Waste". researchwaste.net. Archived from the original on 29 October 2016.

- ^ Prinz, F.; Schlange, T.; Asadullah, M. (2011). "Believe it or not: How much can we rely on published data on potential drug targets?". Nature Reviews Drug Discovery. 10 (9): 712. doi:10.1038/nrd3439-c1. PMID 21892149.

- ^ Tan, SY; Furubayashi, J (2014). "Hideyo Noguchi (1876-1928): Distinguished bacteriologist". Singapore Medical Journal. 55 (10): 550–551. doi:10.11622/smedj.2014140. ISSN 0037-5675. PMC 4293967. PMID 25631898.

- ^ Browne, Malcolm (3 May 1989). "Physicists Debunk Claim Of a New Kind of Fusion". New York Times. Retrieved 3 February 2017.

- ^ Cheney, Margaret (1999), Tesla, Master of Lightning, New York: Barnes & Noble Books, ISBN 0-7607-1005-8, p. 107; "Unable to overcome his financial burdens, he was forced to close the laboratory in 1905."

- ^ Dominus, Susan (Oct 18, 2017). "When the Revolution Came for Amy Cuddy". New York Times Magazine.

Further reading

- Timmer, John (October 2006). "Scientists on Science: Reproducibility". Ars Technica.

- Saey, Tina Hesman (Jan 2015). "Is redoing scientific research the best way to find truth? During replication attempts, too many studies fail to pass muster". Science News. "Science is not irrevocably broken, [epidemiologist John Ioannidis] asserts. It just needs some improvements. "Despite the fact that I've published papers with pretty depressive titles, I'm actually an optimist," Ioannidis says. "I find no other investment of a society that is better placed than science.""

External links

- Transparency and Openness Promotion Guidelines from the Center for Open Science

- Guidelines for Evaluating and Expressing the Uncertainty of NIST Measurement Results of the National Institute of Standards and Technology

- Reproducible papers with artifacts by the cTuning foundation

- ReproducibleResearch.net

Source: https://en.wikipedia.org/wiki/Reproducibility
